Retrieval Augmented Generation vs. Fine Tuning

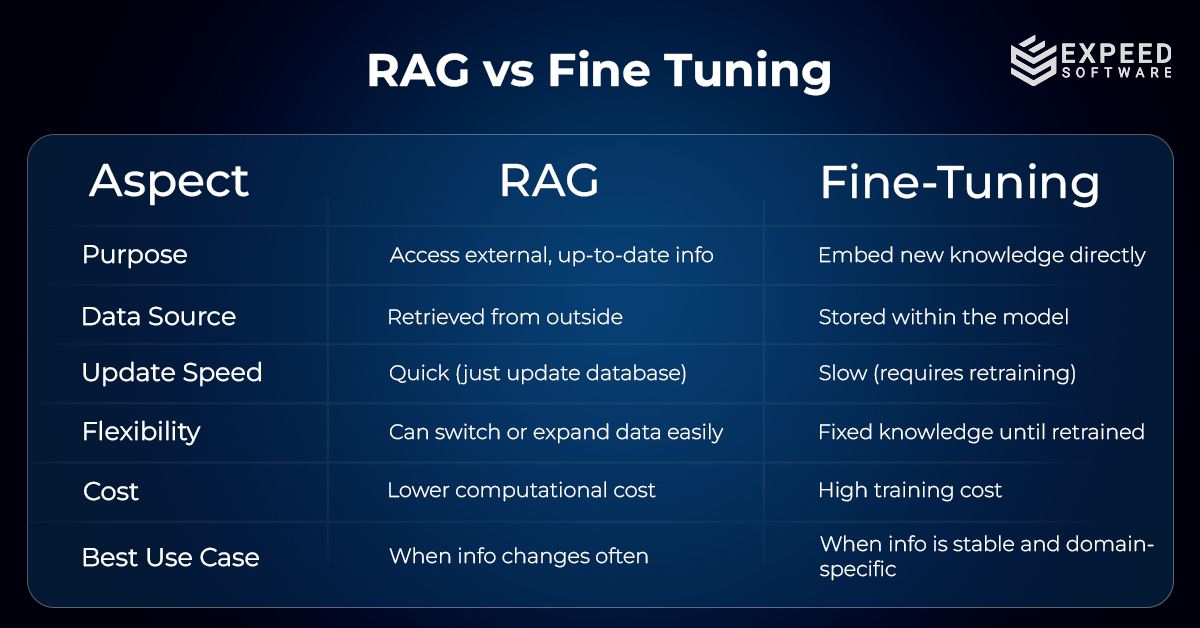

To understand why RAFT AI stands out, it’s important to look at how RAG and traditional fine-tuning work. Out of the available LLM adaptation techniques, RAG and fine-tuning were the two methods that were proven to be efficient. The best among both depends solely on the project and the intent of an LLM.

Retrieval Augmented Generation (RAG)

To understand how RAG works, let us look at a real-time scenario of a student who has already learned a lot and still stays close to his stack of books to depend on in case someone comes up with a new question. This simply means the student never blindly mugs up all the answers but chooses to stay up to date by referring to the sources available whenever necessary. This is exactly how RAG works.

The sole intent of Retrieval Augmented Fine-tuning was to create smarter language models without going through the turmoil of training them repeatedly. When prompted with a question, RAG looks for relevant information in an external database, knowledge base or document repository to spot the latest information related to the topic. These retrieved document snippets will be sent to a language model, helping it to form an answer based on the most recent and relevant information.

Such an approach makes RAG overcome the limitations of a language model that depends solely on the pre-trained data. Most LLMs like GPT or LAMA won’t be aware of the most recent information while prompted. This is where RAG stands out by seeking out real-time data when asked a question. One of the other perks of RAG is its flexibility. Without modifying the underlying structure of the language model, you can always update the external database.

Having said this, it can’t be said that RAG is a flawless approach. As RAG depends on dynamic data retrieval, it might sometimes encounter issues between choosing from relevant and irrelevant documents. Hence, the retrieval process requires fine-tuning to avoid fetching wrong documents when a question is asked. In short, RAG is fast and depends on updated data retrieval but might be subjected to returning distractor documents.

Fine-Tuning

Fine-tuning is a bit different from how RAG works. In fine-tuning, you will already have a ‘pre-trained model.’ This model will already be able to understand trillions of words, nuances, grammar, etc. As such a model will be already used to a big load of text datasets, your immediate next step will be to introduce it to a domain-specific dataset.

For instance, you could offer a collection of case studies or technical frameworks as a starting point for the model, eliminating the need to train it from the beginning. Such an approach provides a general-purpose model in a specific direction. It’s like enrolling a well-read individual into a specialized course. Such a training approach helps the model to tune itself according to the newly fed information instead of just storing it. Hence, fine-tuning provides a model of its specialized tone and accuracy. Such a model doesn't just look things up but can answer the questions directly and confidently, as the relevant knowledge is baked straight into its neural structure.

So, while fine-tuning makes an AI extremely sharp within its niche, it also limits its flexibility to adapt to new or evolving information. That’s where the need for a more dynamic, hybrid approach arises.

What is Retrieval Augmented Fine-tuning (RAFT)?

The limitations of RAG and fine-tuning gave rise to a new, smarter approach known as RAFT, or Retrieval-Augmented Fine-Tuning. The best way to define RAFT is to present it as an LLM adaptation process combining the benefits of both RAG and fine-tuning. Due to this, RAFT gets the adaptability of RAG and the precision of fine-tuning. The idea of RAFT is to create large language models that can reason like domain experts while staying up to date with recent happenings in the niche.

When prompted with a question, RAFT AI follows a process like RAG in the beginning. It will first access external sources to find matching documents. Once the answers are fetched, instead of using all of them to form an answer, RAFT trains the model to identify and ignore the non-relevant documents. By avoiding data that do not contribute to the right answer, RAFT thereby ensures maximum precision. RAFT follows a citation-based reasoning style. i.e., during training, the model learns to pick the most relevant portion from the retrieved document and cites that exact sequence while answering. This helps the model to answer prompts by improving both accuracy and interpretability.

Compared to fine-tuning, RAFT doesn't inject static knowledge into the model. Instead, it fine-tunes the model to 'think,' considering the context, weigh information and filter out the fluff. Such a style makes RAFT AI a popular choice for domain-specific scenarios such as scientific research or enterprise-level data systems, where both accuracy and freshness of knowledge are equally important.

Why does RAFT matter?

Wondering why RAFT is considered popular and the best choice out there for training AI models? The answer is simple. To stay relevant in an AI-driven world is no longer a luxury. Even the smartest model can go outdated considering how new layers of information are getting added every day to almost all domains. Though traditional fine-tuning can make a model smarter within a domain and RAG can fetch updated data, neither of these techniques ensures sustainable intelligence. This is where RAFT earns its significance.

RAFT acts as a bridge between knowledge consistency and knowledge evolution. It equips large language models with the ability to learn from fresh information without erasing what they already know. Instead of choosing between stability and adaptability, RAFT allows AI systems to embrace both.

For industries that demand precision and context-awareness, this becomes a game changer. In healthcare, RAFT helps models interpret emerging research findings while staying aligned with established clinical guidelines. In finance, it enables real-time decision-making based on current market patterns without compromising on analytical reasoning. In legal or compliance sectors, it ensures that models stay up to date with the latest regulations while maintaining interpretive accuracy.

Another reason RAFT stands out is its explainability. Since the model learns to cite relevant sources while answering, it doesn’t just give responses—it provides evidence-based reasoning. This makes RAFT-trained systems not only smarter but also more transparent and trustworthy, qualities that are critical as AI become an integral part of enterprise decision-making.

In short, RAFT isn’t just an upgrade in model training, it’s a shift in how AI evolves. It represents a move from static intelligence to living intelligence—models that continue to think, learn, and reason in sync with the world around them.