The Emerging Challenges

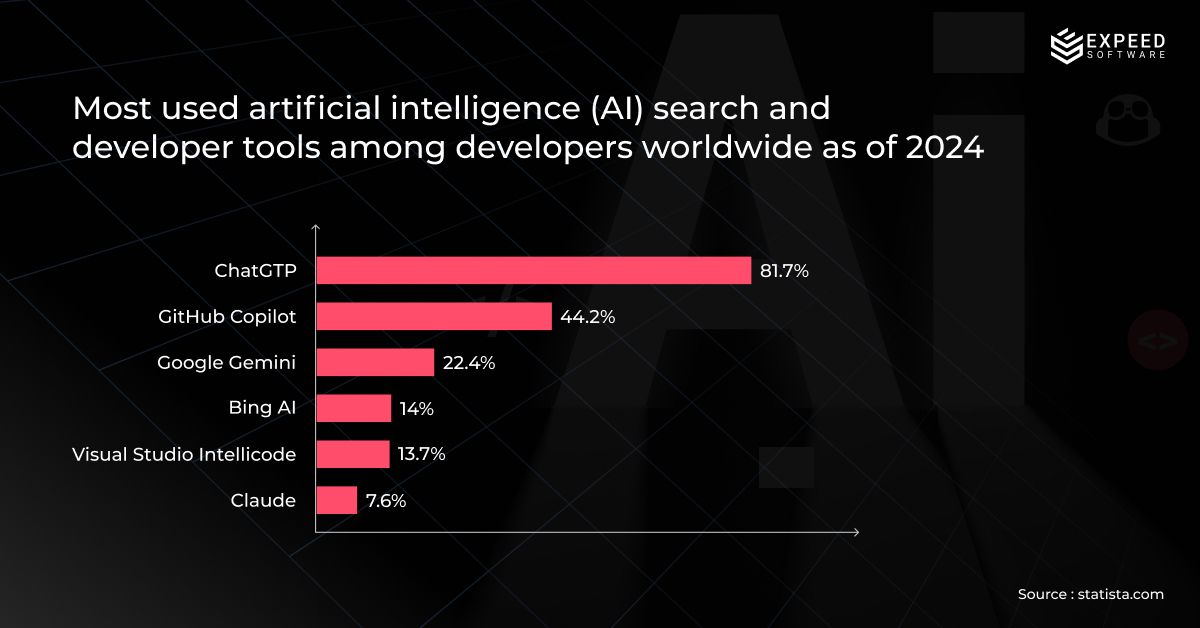

The rapid adoption of AI tools in software development is unmistakable. According to Statista’s 2024 survey, 82 percent of developers regularly use OpenAI’s ChatGPT in their workflows, while 44 percent employ GitHub Copilot as part of their coding toolkit. This level of engagement highlights how deeply AI has penetrated everyday programming tasks around the world.

While the widespread use of AI coding tools accelerates development, it also brings new challenges for the quality and sustainability of software engineering practices. These challenges require careful attention to ensure that AI complements, rather than diminishes, a developer’s skills and judgement.

1. Knowledge without Depth

AI can produce functional code without requiring the developer to engage deeply with the underlying logic. Over time, this risks creating a gap between a developer’s ability to apply solutions and their ability to derive them.

2. Inconsistent Coding Practices

Different AI models are trained on different datasets, producing variations in structure, naming, and formatting. Without explicit alignment, these differences accumulate in a codebase, increasing maintenance overhead.

3. Reduced Analytical Engagement

The presence of a plausible solution reduces the incentive to explore alternative designs or assess trade-offs, both of which are crucial for architectural resilience.

4. Communication Gaps in Collaboration

When AI generates large portions of code, the rationale behind certain design decisions may be unclear, making it more difficult to explain, document, or justify choices during peer review or cross-team collaboration.

A Framework for Sustainable Developer Growth in the AI Era

To ensure that AI enhances rather than erodes a developer’s skill set, organisations and individuals need to adopt a structured approach that combines strong foundational knowledge, context-aware AI usage, and active translation of solutions across domains.

1. Reinforcing Core Knowledge

The effectiveness of AI assistance is limited by the user’s ability to assess its output. This requires a grounding in algorithms, data structures, design patterns, and system architecture.

Example:

An AI generates a function that sorts a large dataset using an $O(n^2)$ algorithm. A developer with strong fundamentals will recognise that the approach is unsuitable for the expected data scale and replace it with an $O(n \log n)$ method such as merge sort or (on average) quicksort. Without this knowledge, the inefficient code may be deployed into production unnoticed, leading to performance degradation.
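The Python sketch below contrasts a quadratic insertion sort with a merge sort. The insertion sort stands in for the kind of function an assistant might produce, and both function names are illustrative. In real Python code the built-in `sorted()`, which is $O(n \log n)$, would normally be the right choice. This is only a teaching sketch.

```python
import random
import time


def insertion_sort(items):
    """O(n^2) on average and in the worst case: each element may be
    shifted past every element that is already sorted."""
    result = list(items)
    for i in range(1, len(result)):
        current = result[i]
        j = i - 1
        # Shift larger elements one position to the right.
        while j >= 0 and result[j] > current:
            result[j + 1] = result[j]
            j -= 1
        result[j + 1] = current
    return result


def merge_sort(items):
    """O(n log n): split in half, sort each half recursively, then merge."""
    if len(items) <= 1:
        return list(items)
    mid = len(items) // 2
    left = merge_sort(items[:mid])
    right = merge_sort(items[mid:])
    merged = []
    i = j = 0
    while i < len(left) and j < len(right):
        # "<=" keeps equal elements in their original order (stable sort).
        if left[i] <= right[j]:
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    # One side is exhausted; append whatever remains of the other.
    merged.extend(left[i:])
    merged.extend(right[j:])
    return merged


if __name__ == "__main__":
    for n in (1_000, 2_000):
        data = [random.random() for _ in range(n)]
        for sort_fn in (insertion_sort, merge_sort):
            start = time.perf_counter()
            result = sort_fn(data)
            elapsed = time.perf_counter() - start
            assert result == sorted(data)
            print(f"n={n:>5} {sort_fn.__name__:<14} {elapsed:.4f}s")
```

Doubling `n` should roughly quadruple the insertion sort's running time. The merge sort's time should grow only slightly more than twofold. That scaling difference is what a reviewer with strong fundamentals is looking for.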

2. Contextual Evaluation of AI Output

A survey from Qodo reports that 78% of developers say AI coding tools have improved their productivity, yet 76% still don't fully trust the generated code: a clear mismatch between output and confidence. AI-generated code must therefore be reviewed in the context of the system where it will operate, including performance constraints, integration with existing modules, and compliance with organizational policies.

Example:

An AI proposes a Python script to transform incoming CSV files into JSON format.

Before deployment, the developer examines whether:

- The script complies with the team’s logging standards.

- It integrates with the message queue system already in place.

- It can handle files in the multi-gigabyte range without memory exhaustion.

Through this review, the developer identifies that the AI-generated solution loads the entire file into memory rather than streaming it, prompting a redesign before release.
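Here is a minimal sketch of the streaming alternative. It uses only the standard library and assumes the output format is JSON Lines (one JSON object per line) rather than a single JSON array. The function names and file paths are hypothetical, and integration with the message queue is omitted. `csv.DictReader` yields one row at a time and each row is written immediately, so memory use stays roughly constant regardless of file size.

```python
import csv
import io
import json
import logging

logger = logging.getLogger(__name__)


def csv_to_json_lines(src, dst):
    """Stream rows from the text stream `src` (CSV with a header row) to
    `dst` as JSON Lines, holding only one row in memory at a time."""
    count = 0
    for row in csv.DictReader(src):
        dst.write(json.dumps(row) + "\n")
        count += 1
    logger.info("converted %d rows", count)
    return count


def convert_file(csv_path, jsonl_path):
    # newline="" lets the csv module handle quoted fields that contain
    # embedded newlines correctly.
    with open(csv_path, newline="", encoding="utf-8") as src, \
         open(jsonl_path, "w", encoding="utf-8") as dst:
        return csv_to_json_lines(src, dst)


if __name__ == "__main__":
    sample = io.StringIO("id,name\n1,Ada\n2,Grace\n")
    out = io.StringIO()
    csv_to_json_lines(sample, out)
    print(out.getvalue(), end="")
    # {"id": "1", "name": "Ada"}
    # {"id": "2", "name": "Grace"}
```

By contrast, a version such as `json.dump(list(csv.DictReader(src)), dst)` materialises every row in memory before writing anything. That is the pattern the review in this example caught.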

3. Translation Across Domains and Contexts

Rather than accepting an AI output as a fixed artefact, effective developers re-express it in other contexts. This process reveals implicit assumptions and strengthens adaptability.

Example:

An AI produces a synchronous REST API endpoint in Node.js for retrieving customer orders. The developer rewrites it in Go as an asynchronous endpoint and integrates it with a message-driven architecture. During the translation, they discover that the AI’s original implementation did not handle timeouts correctly, which would have caused issues under high load.
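The example itself involves Node.js and Go. To keep a single language across this article, the sketch below uses Python's `asyncio` to show the kind of timeout handling that the translation exposed. `fetch_orders_from_db` is a hypothetical stand-in for a slow downstream call, and its `delay` argument exists only to simulate that slowness. The 2-second default budget is an arbitrary assumption. `asyncio.wait_for` cancels the awaited call once the budget is exceeded, so a stalled dependency produces a fast, explicit error instead of a request that hangs and ties up resources under load.

```python
import asyncio


class UpstreamTimeout(Exception):
    """Raised when the order lookup exceeds its time budget."""


async def fetch_orders_from_db(customer_id, delay):
    # Hypothetical stand-in for a database or downstream service call.
    await asyncio.sleep(delay)
    return [{"customer_id": customer_id, "order_id": 1}]


async def get_customer_orders(customer_id, delay=0.0, timeout_s=2.0):
    """Return the customer's orders, or raise UpstreamTimeout if the
    lookup takes longer than timeout_s seconds."""
    try:
        return await asyncio.wait_for(
            fetch_orders_from_db(customer_id, delay), timeout=timeout_s
        )
    except asyncio.TimeoutError:
        # wait_for has already cancelled the inner call at this point.
        raise UpstreamTimeout(
            f"orders for {customer_id} took longer than {timeout_s}s"
        ) from None


if __name__ == "__main__":
    print(asyncio.run(get_customer_orders("c-42")))
    try:
        asyncio.run(get_customer_orders("c-42", delay=0.5, timeout_s=0.1))
    except UpstreamTimeout as exc:
        print("timed out:", exc)
```

In an HTTP handler, `UpstreamTimeout` would typically be mapped to a 504 Gateway Timeout response rather than left to surface as a generic server error.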

4. Combining Manual and AI-Assisted Development

A balanced workflow alternates between manual implementation and AI-supported refinement. This maintains a developer’s analytical skills while using AI to accelerate repetitive or boilerplate work.

Example:

For a new microservice, the developer manually designs the data model and writes the core business logic. An AI development tool is then used to:

- Generate a test suite based on the API schema.

- Suggest index optimizations for the database layer.

- Produce initial documentation for the service.

This approach ensures that critical thinking and design remain human-driven, while AI coding tools reduce repetitive engineering effort.

5. AI as a Discovery and Exploration Platform

AI can serve as a tool for knowledge expansion, enabling developers to explore new concepts, patterns, and technologies without leaving their development environment.

Example:

A developer working on a distributed caching problem uses AI to:

- Explain the trade-offs between write-through and write-back caching.

- Suggest frameworks that support each approach.

- Generate comparative benchmarks for small test datasets.

The resulting discussion with an AI development tool leads the developer to a hybrid approach that better fits the team’s operational requirements.
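Below is a minimal in-memory sketch of the two policies. It assumes that a plain dict stands in for the slow backing store, such as a database. Write-through updates the store on every write, so the store is never stale, but each write pays the store's latency. Write-back only marks the entry as dirty and defers the store update to a later `flush()`. This batches writes but risks losing them if the cache fails before flushing. The class names are illustrative and not taken from any framework.

```python
class WriteThroughCache:
    """Every write goes to the cache and the backing store immediately."""

    def __init__(self, store):
        self.store = store  # dict standing in for a database
        self.cache = {}

    def get(self, key):
        if key not in self.cache:
            self.cache[key] = self.store[key]  # populate on miss (KeyError if absent)
        return self.cache[key]

    def put(self, key, value):
        self.cache[key] = value
        self.store[key] = value  # synchronous write to the store


class WriteBackCache:
    """Writes stay in the cache until flush(); the store may lag behind."""

    def __init__(self, store):
        self.store = store
        self.cache = {}
        self.dirty = set()

    def get(self, key):
        if key not in self.cache:
            self.cache[key] = self.store[key]
        return self.cache[key]

    def put(self, key, value):
        self.cache[key] = value
        self.dirty.add(key)  # defer the store write

    def flush(self):
        # Persist all pending writes in one batch.
        for key in self.dirty:
            self.store[key] = self.cache[key]
        self.dirty.clear()


if __name__ == "__main__":
    db_a, db_b = {}, {}
    wt, wb = WriteThroughCache(db_a), WriteBackCache(db_b)
    wt.put("user:1", "Ada")
    wb.put("user:1", "Ada")
    print(db_a, db_b)  # {'user:1': 'Ada'} {}
    wb.flush()
    print(db_b)  # {'user:1': 'Ada'}
```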

Building Resilience Through Deliberate Practice

The speed at which AI coding tools can produce working solutions often creates the illusion that proficiency is growing at the same pace. In reality, technical depth develops through deliberate practice—working through unfamiliar problems, debugging without hints, and exploring the “why” behind a solution’s behavior. When these moments are skipped in favor of instant answers, the underlying resilience that sustains a developer’s long-term growth weakens.

Resilient developers treat AI output not as a destination, but as a starting point for further refinement. They stress-test generated code under edge conditions, introduce controlled failures to observe recovery paths, and experiment with alternative implementations to uncover trade-offs. This process forces interaction with the mechanics of the code, deepening understanding while also building a library of mental patterns that AI alone cannot provide.
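One way to introduce controlled failures is to wrap a dependency in a test double that fails a set number of times. The test then checks that the code under test recovers as intended and gives up cleanly at the edge. The sketch assumes a hypothetical `fetch_with_retry` helper, the kind of code an assistant might generate, and a `FlakyService` test double. Both names are illustrative.

```python
import time


class FlakyService:
    """Test double: raises ConnectionError for the first `failures` calls."""

    def __init__(self, failures):
        self.failures = failures
        self.calls = 0

    def fetch(self):
        self.calls += 1
        if self.calls <= self.failures:
            raise ConnectionError(f"injected failure #{self.calls}")
        return "ok"


def fetch_with_retry(service, attempts=3, base_delay=0.01):
    """Retry transient errors with exponential backoff; re-raise the last
    error once all attempts are used."""
    for attempt in range(attempts):
        try:
            return service.fetch()
        except ConnectionError:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the failure to the caller
            time.sleep(base_delay * 2 ** attempt)


if __name__ == "__main__":
    # Recovers when there are fewer injected failures than attempts.
    svc = FlakyService(failures=2)
    print(fetch_with_retry(svc), svc.calls)  # ok 3

    # Edge condition: failures == attempts, so the error must propagate.
    svc = FlakyService(failures=3)
    try:
        fetch_with_retry(svc)
    except ConnectionError as exc:
        print("gave up:", exc)  # gave up: injected failure #3
```

Probing further edges exposes assumptions the generated helper never stated. For example, with `attempts=0` the loop body never runs and the function silently returns `None`.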

Equally important is maintaining a rhythm of independent problem-solving—tackling at least some tasks without AI assistance. This preserves fluency in navigating documentation, reasoning through unfamiliar APIs, and identifying subtle defects that automated systems might miss. Over time, the combination of AI-powered acceleration and human-led exploration produces engineers who can adapt to new technologies without becoming dependent on a single tool’s capabilities.

Long-Term Competence in the AI Era

The defining skill for developers in the coming decade will not be the ability to generate code quickly, but the ability to integrate AI-assisted outputs into robust, scalable, and maintainable systems. This requires:

- Constant reinforcement of foundational knowledge.

- Rigorous contextual evaluation of AI suggestions.

- Adaptation of solutions across multiple domains and environments.

- Retention of manual problem-solving capabilities.

Developers who approach AI as an extension of their reasoning process, rather than a replacement for it, will continue to grow in capability and value. The result will be software that benefits from the speed and versatility of AI while preserving the depth, judgement, and adaptability that only human engineers can provide.