This challenge became clear during the development of a full-stack application for sharing employee ideas, built with two specialized AI agents: one responsible for the React frontend and another for the .NET backend. Both were capable models, strong at generating isolated components. However, when placed together in a single development workflow, they didn’t behave like a unified team. They behaved like two independent contractors who had never met.

The frontend agent frequently produced data structures that didn’t exist anywhere in the system. The backend agent generated endpoints without referencing what the UI expected. Instead of complementing each other, the two agents functioned in parallel universes. This resulted in constant mismatches, repeated clarifications, and frequent rework. Anyone who has encountered an AI-generated field that was never defined, or an API call that returns data unrelated to the UI, has experienced this fragmentation firsthand. Because the role of AI in software development will only grow, we had to find a structure that keeps everything aligned and predictable.

Where the Breakdown Truly Occurred

A full-stack application is inherently a dual system. One half thinks in terms of UI components, state flows, hooks, and interactions. The other half thinks in terms of models, business logic, validation, and endpoints. When these two layers do not share the same mental model of the application, inconsistencies become inevitable.

The agents were not malfunctioning; the problem lay in how we had adopted AI in the development process. They followed instructions precisely, but only the instructions they were individually given. They did not share memory, context, or architectural understanding. They were not aware of each other’s responsibilities or decisions. Without a common source of truth, even the most capable models drift apart.

The absence of an overarching structure became a real bottleneck. The system lacked a single entity capable of seeing, maintaining, and enforcing the big picture.

Recognizing That AI Tools Need a Team Structure

The realization emerged during discussions around why the models were failing to remain synchronized. The problem wasn’t prompt quality or agent capability. It was architectural.

In human engineering teams, a system is never built by having every developer report directly to the CEO or product owner. Successful teams rely on structure:

- A technical lead to maintain coherence

- Shared documentation or source of truth

- Consistent architecture

- Clear division of roles

- Standardized contracts

The agents used to build the platform had none of these. They were intelligent, but isolated. They were skilled, but uncoordinated. And just like human developers without guidance, their work diverged.

This led to a critical insight: AI agents do not simply need refined prompts; they need a management system. A framework that establishes responsibility, distributes context, and ensures consistency.

This insight redefined the AI development workflow.

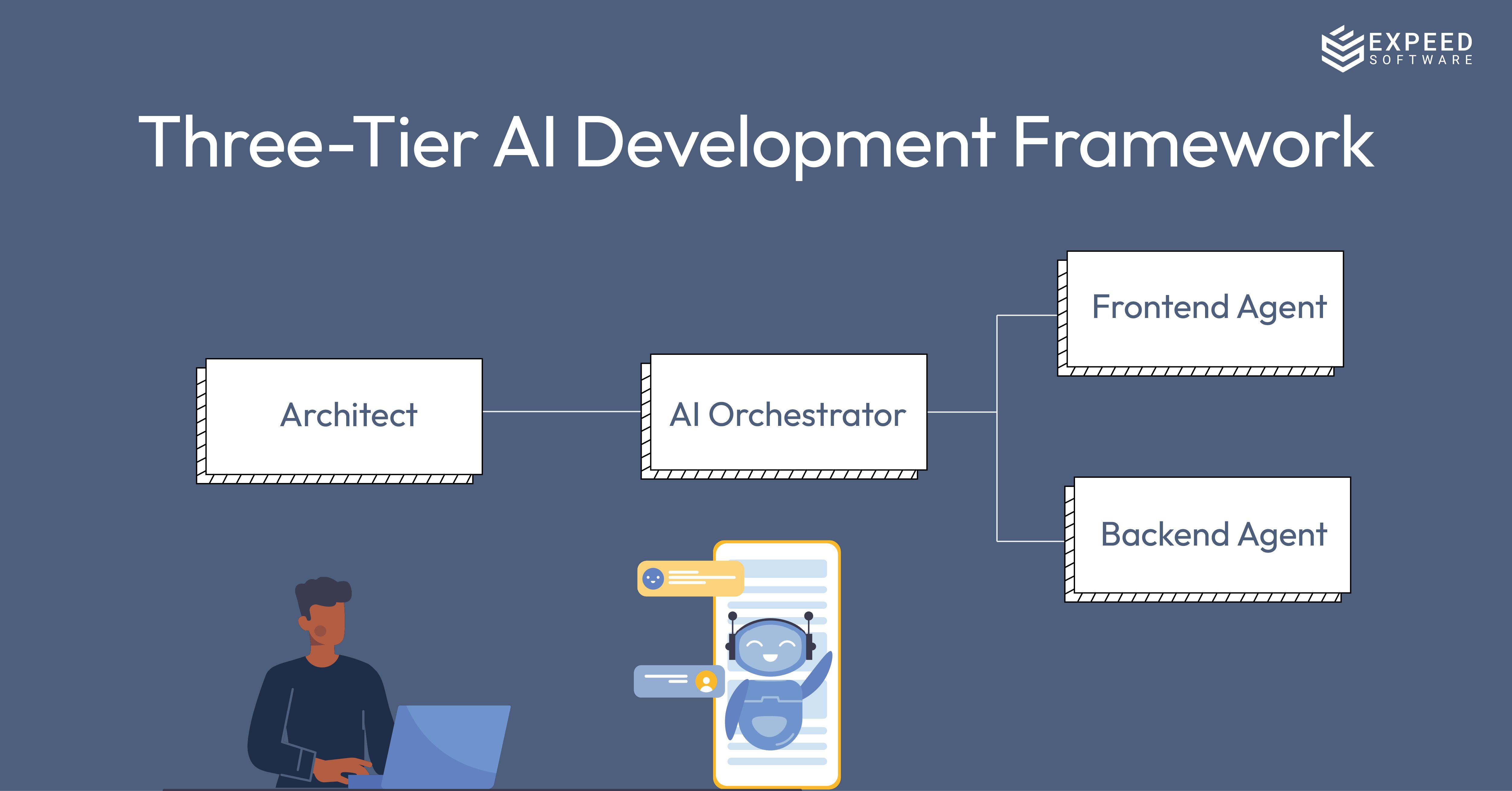

Building the Three-Tier AI Development Framework

The team restructured the development approach into three layers, each designed to solve a specific coordination problem. This marked the beginning of a more reliable, scalable, and predictable AI-assisted workflow.

1. The Human Architect

The human role shifted from constant supervision to strategic direction.

This role defined:

- The vision behind a feature

- The purpose of the requirement

- The high-level architecture

- The expected user experience

The architect no longer provided step-by-step instructions or rewrote model outputs. Instead, the architect served as the source of product and architectural intent, leaving the technical alignment to the system.

2. The AI Orchestrator (Central Gemini LLM)

This layer became the most transformative component of the workflow. The Orchestrator acted as a technical lead, something no individual agent could do.

It was given responsibility for:

- Maintaining end-to-end project context

- Understanding both frontend and backend perspectives

- Creating API contracts

- Ensuring consistent naming and data structures

- Delegating tasks to the specialized agents

- Preventing mismatches between layers

This model became the “brain” connecting the entire system. It understood what the architect envisioned and what the agents needed in order to execute that vision. It provided the missing leadership that ensured coherence.

3. The AI Specialists (CLI-Based Agents)

These agents remained the hands-on builders:

- One dedicated to the React frontend

- One dedicated to the .NET backend

However, the critical difference in this new structure was that they no longer received requirements directly from the human architect. All instructions came through the Orchestrator. This eliminated guesswork, prevented assumption drift, and ensured both agents were aligned with the same technical contract.

This three-part structure effectively mirrored a real engineering team, enabling the AI agents to function as coordinated members rather than isolated tools.
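As a rough illustration of the delegation step, the sketch below derives both specialist instructions from one shared contract object, so endpoint and field names cannot diverge between them. It is a minimal sketch, not the project's actual Orchestrator: `ApiContract`, `AgentInstruction`, and `delegate` are hypothetical names introduced here, and the real Orchestrator was an LLM rather than a template function.

```typescript
// Hypothetical shapes for illustration; none of these names come from the project.
interface ApiContract {
  endpoint: string;               // e.g. "/api/users/{id}/profile"
  responseType: string;           // name of the DTO the backend returns
  fields: Record<string, string>; // field name -> type description
}

interface AgentInstruction {
  role: "frontend" | "backend";
  text: string;
}

// Build one instruction per specialist from the same contract object,
// so both agents see identical endpoint and field names.
function delegate(contract: ApiContract, uiRoute: string): AgentInstruction[] {
  const names = Object.keys(contract.fields);
  const typedFields = names
    .map((name) => `${name}: ${contract.fields[name]}`)
    .join(", ");

  return [
    {
      role: "backend",
      text: `Create endpoint ${contract.endpoint} returning ${contract.responseType} { ${typedFields} }.`,
    },
    {
      role: "frontend",
      text: `Create route ${uiRoute}. On load, call ${contract.endpoint} and render ${names.join(", ")}.`,
    },
  ];
}
```

The design point is that the specialists never receive free-form requirements: whatever wording each one gets, the names inside it come from a single object.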

Putting the Workflow to the Test: Building the Profile Page

To validate the new system, the team built a feature central to the platform: a user profile page. The workflow illustrated how dramatically the Orchestrator changed development.

The Architect’s Request

The requirement was defined at a conceptual, user-facing level:

“A three-column profile page displaying the user’s information, a list of submitted ideas, and recent activity.”

No technical details were provided at this stage. The architect intentionally avoided specifying routes, DTO structures, or component layouts. This allowed the Orchestrator to derive the technical architecture from the functional intent.

How the Orchestrator Broke Down the Requirement

Instead of generating scattered output, the Orchestrator produced matching instructions for the backend and frontend agents.

Instruction to the Backend Agent

“Create a new endpoint at:

/api/users/{id}/profile

Return a UserProfileDto containing:

- User details

- List<Idea> → submitted ideas

- List<Comment> → recent comments

Query the database and populate all three.”

This instruction defined both the API structure and the data contract. It ensured the backend produced exactly what the frontend expected. No more, no less.
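The data contract in that instruction can be written down as types. The backend in this project was .NET, so the sketch below is only a TypeScript mirror of the contract, as a frontend might see it. `UserProfileDto`, `submittedIdeas`, and `recentComments` appear in the instructions; the `user` field name and every inner field (`id`, `displayName`, `title`, `text`) are assumptions, and `IdeaDto` and `CommentDto` stand in for the `Idea` and `Comment` types, whose shape the source does not give.

```typescript
// Assumed inner shapes; the source names the types but not their fields.
interface UserDetails {
  id: string;
  displayName: string;
}

interface IdeaDto {
  id: string;
  title: string;
}

interface CommentDto {
  id: string;
  text: string;
}

// Mirrors the UserProfileDto described in the backend instruction.
interface UserProfileDto {
  user: UserDetails;            // "User details" (field name assumed)
  submittedIdeas: IdeaDto[];    // List<Idea>
  recentComments: CommentDto[]; // List<Comment>
}

// Fill in the {id} placeholder of /api/users/{id}/profile.
function profileEndpoint(userId: string): string {
  return `/api/users/${encodeURIComponent(userId)}/profile`;
}
```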

Instruction to the Frontend Agent

“Create a new route at /profile/:userId.

Build a three-column layout.

On load:

- Call /api/users/{id}/profile

- Render submittedIdeas in the center column

- Render recentComments in the right column.”

The frontend was given a clear route, data source, and rendering order, aligned exactly with the backend design.
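A framework-free sketch of that load step might look like the following. It leaves React out so the data flow stays visible. The placement of `submittedIdeas` (center) and `recentComments` (right) follows the instruction. Putting user information in the left column, the `user` field name, the inner fields, and the helper names `toColumns` and `loadProfileColumns` are all assumptions made for illustration.

```typescript
// Shape follows the contract in the instructions; inner fields are assumed.
interface UserProfile {
  user: { displayName: string };
  submittedIdeas: { title: string }[];
  recentComments: { text: string }[];
}

// Injected so the sketch does not depend on a particular HTTP client.
type FetchJson = (url: string) => Promise<unknown>;

interface ProfileColumns {
  left: string;     // user information (column position assumed)
  center: string[]; // submittedIdeas, per the instruction
  right: string[];  // recentComments, per the instruction
}

// Map the API response onto the three-column layout.
function toColumns(profile: UserProfile): ProfileColumns {
  return {
    left: profile.user.displayName,
    center: profile.submittedIdeas.map((idea) => idea.title),
    right: profile.recentComments.map((comment) => comment.text),
  };
}

// Call the profile endpoint for the userId taken from the /profile/:userId route.
function loadProfileColumns(userId: string, fetchJson: FetchJson): Promise<ProfileColumns> {
  const url = `/api/users/${encodeURIComponent(userId)}/profile`;
  // The cast trusts the backend to honor the contract; there is no runtime validation here.
  return fetchJson(url).then((body) => toColumns(body as UserProfile));
}
```

In a React component, a function like `loadProfileColumns` would typically be called from an effect keyed on the route's `userId`.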

The Impact of This Alignment

The Orchestrator ensured:

- Variable names were identical

- Data fields matched precisely

- The layout and API aligned

- No fields were invented

- No assumptions were added

- No mismatches occurred

This was the moment development shifted from corrective to generative. Instead of spending time fixing misunderstandings, the team spent time building.
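One way such alignment could be checked mechanically is to compare a response payload's keys against the contract's field list. The source does not say the team did this; the sketch is purely illustrative. `diffAgainstContract` is a hypothetical helper, and the `user` entry in the field list is an assumed name for the "User details" part of the DTO.

```typescript
// Field names from the profile contract; "user" is an assumed name.
const PROFILE_FIELDS: readonly string[] = ["user", "submittedIdeas", "recentComments"];

interface ContractDiff {
  missing: string[];    // fields the contract requires but the payload lacks
  unexpected: string[]; // fields in the payload that the contract never defined
}

// Compare top-level keys only; nested shapes are not inspected.
function diffAgainstContract(
  payload: Record<string, unknown>,
  expected: readonly string[],
): ContractDiff {
  const actual = Object.keys(payload);
  return {
    missing: expected.filter((key) => actual.indexOf(key) === -1),
    unexpected: actual.filter((key) => expected.indexOf(key) === -1),
  };
}
```

A payload carrying an invented `ideas` field instead of `recentComments` would come back with `recentComments` listed as missing and `ideas` as unexpected, which is exactly the kind of drift described earlier.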

From Corrections to Conversations

Before the Orchestrator was introduced, development was a loop of redoing work:

- Rewriting prompts

- Restating architecture

- Explaining the same concepts repeatedly

- Fixing backend/frontend misalignment

- Correcting fabricated object fields

After the Orchestrator, the dynamic changed entirely. The architect described outcomes. The Orchestrator planned solutions. The agents executed in sync. AI development became a conversation: structured, predictable, and scalable.

The system no longer produced chaos. It produced alignment.

Conclusion

There is a common belief that success with AI development comes from crafting precise prompts. The experience of building the idea-sharing platform revealed something different: the real advantage comes from structuring AI like a well-run engineering team.

When AI tools are placed into coordinated roles:

- The Architect defines intent

- The Orchestrator ensures alignment

- The Specialists generate code

The entire workflow becomes dependable.

The future of AI development will not be driven by a single powerful model acting alone. It will be driven by systems: teams of models working together under a unified architectural framework. True power lies not in individual prompts or LLM agents, but in the process that connects them.